Context

Yesterday (26/02), various news reports about a Willy Wonka-inspired experience in Glasgow started to circulate on social media. The articles, from outlets such as BBC News, Glasgow Live, and STV News, refer to ‘furious’ families who attended the underwhelming event.

This included reports of the police being called as visitors deemed the event a ‘farce’:

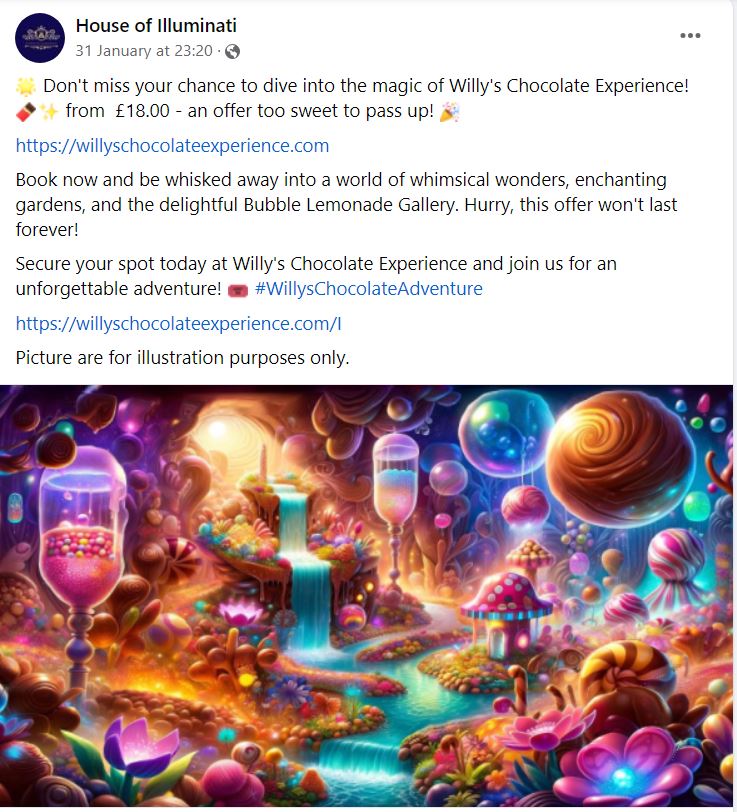

The event was organised by a company called House of Illuminati and promoted through their website and on social media. The event’s promotion was characterised by the use of images generated using artificial intelligence (AI).

In this post I’m going to look at the role generative AI played in the promotion of Willy’s Chocolate Experience and reflect on what went wrong, what generative AI is, and how it can be misused for profit.

House of Illuminati

House of Illuminati was registered as a UK business on 20th November 2023 (link). Alongside House of Illuminati, the company’s director is listed as the active director for Billy De Savage Ltd and Nexuma Holdings, both of which were also incorporated late last year. The nature of these companies is listed as, among others, book publishing and advertising agencies. 233,084 companies are listed at the same address, a virtual office in London.

House of Illuminati’s website offers some insights into the services they offer, including ‘unique experiences’, ‘unforgettable moments’ and ‘transformative events’:

Their website features a mix of AI-generated images alongside other photographic images. Of the four non-AI images of real people in the above screenshot, at least three were taken from other sources. None of these are credited.

| Image | Actual Source |
| --- | --- |
| | Eventologists.co.uk, 2017 |
| | Vimeo, 2016 |
| | YouTube, 2021 |

However, it is the AI-generated images that are interesting here, as it is these same types of images that were used to promote the Glasgow event and inevitably led to guests feeling let down and misled.

AI Images and Willy’s Chocolate Experience

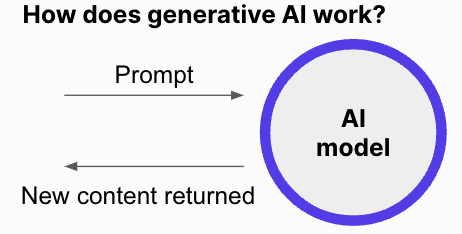

Generative AI is a “type of artificial intelligence technology that can produce various types of content, including text, imagery, audio and synthetic data” (source). In this context, it refers to the use of a web service or app to generate images from a prompt you provide.

Users type in a textual prompt, such as ‘a rainy forest’, and the tool generates an image from this prompt. Popular tools include DALL-E 3 from OpenAI, which also created the well-known ChatGPT tool. These tools are seldom free, and users often have to sign up as they are resource intensive.

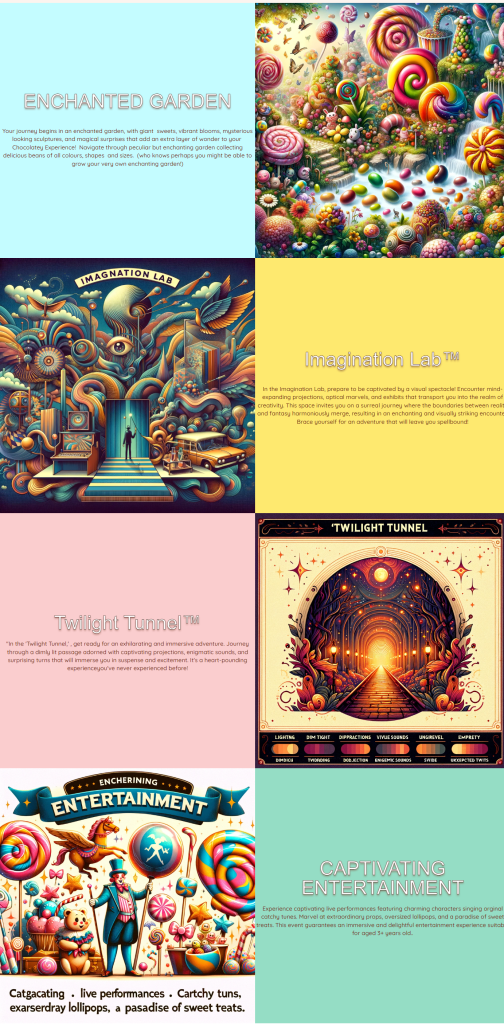

When you visit the event website for Willy’s Chocolate Experience you see the following:

The event website features four AI-generated images: Enchanted Garden, Imagination Lab, Twilight Tunnel, and Captivating Entertainment. One key giveaway to these images’ AI provenance is the text. The images nonsensically refer to:

- IMAGNATION LAB

- DIM TIGHT, DIPPRACTIONS, VIVUE SOUNDS, UNGIREVEL, EMPRETY

- DIMDICLi, TWDRDING, DODJECTION, ENIGEMIC SOUNDS, SVIIDE, UKXEPCTED TWITS

- ENCHERINING ENTERTAINMENT

- Catgacating • live performances • Cartchy tuns, exarserdray lollipops, a pasadise of sweet teats.

AI image generators are notoriously bad at rendering text: although they can generate letters, they struggle to generate meaningful words. This is because these tools are trained on image data and do not inherently learn how to produce text. This can be contrasted with ChatGPT, which is a large language model (LLM) trained on textual data.

These images that portray creative, imaginative scenes are reminiscent of key scenes in the 1971 film Willy Wonka & the Chocolate Factory, such as the famous tunnel scene, chocolate room, and inventing room. House of Illuminati also posted to various Facebook pages to advertise the event. These include ‘Things to do in Scotland with our children’, ‘What’s On In Scotland’, ‘Things To Do Glasgow’, and ‘What’s on around Glasgow’.

Of the eight Facebook posts I found from House of Illuminati promoting the event, only one addressed the promotional images’ provenance, and it did so in a disclaimer that required clicking the ‘See more’ button on Facebook:

I could not find anywhere else on their website or in their social media posts where House of Illuminati say that not only are these images not real, but they are also not graphic representations of what the actual event would entail. Given that the photographic images above were lifted from their sources without credit, and that these AI images were shared with only one hint at their provenance, this does not portray House of Illuminati in a positive light.

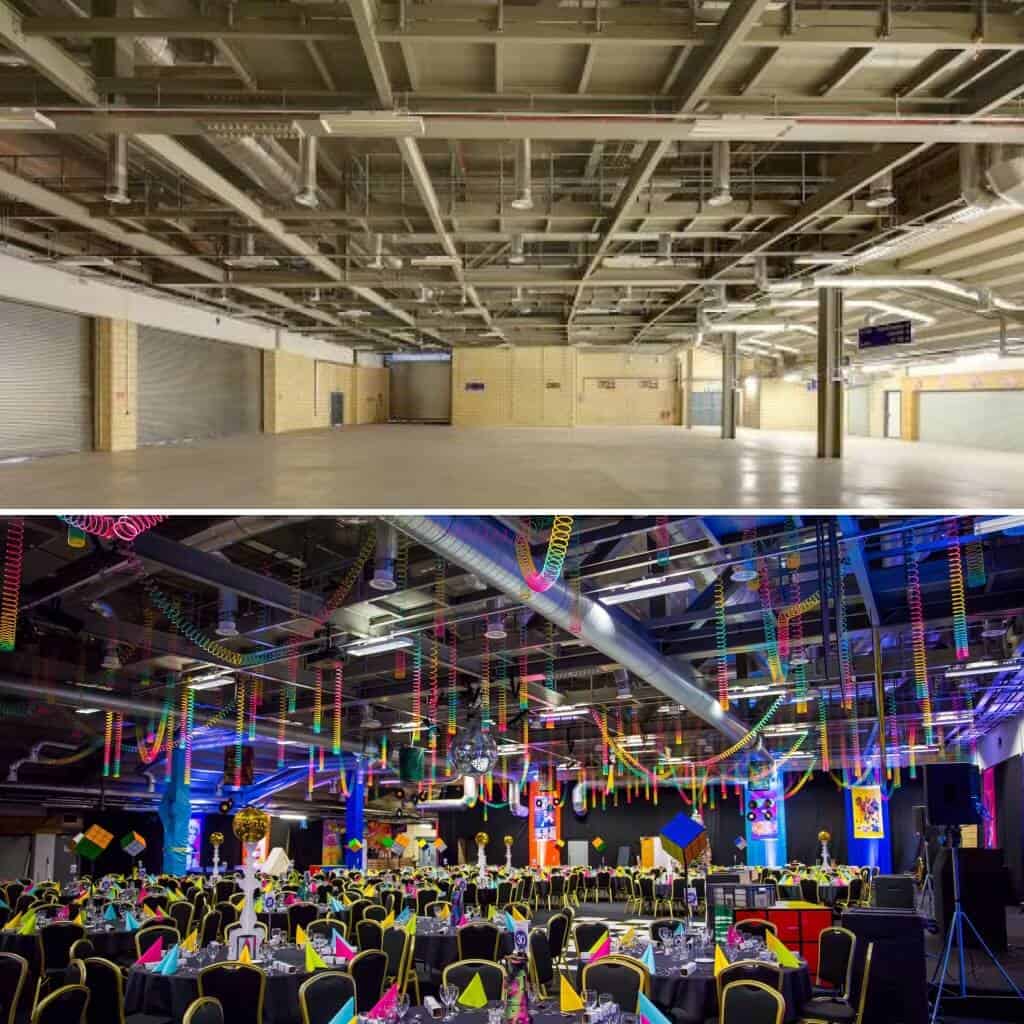

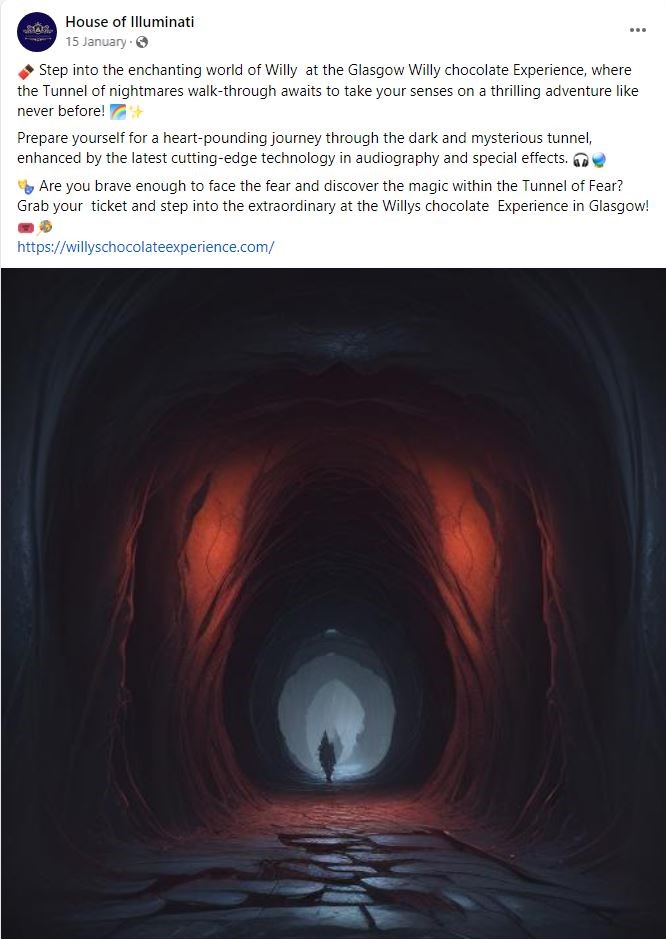

At this point, you may think ‘these images are obviously not real’, but the event also shared much more realistic-looking images like this:

This image seems to show the aforementioned Twilight Tunnel, referred to here as the ‘Tunnel of Fear’. There is no indication at all here that this attraction, which will utilise “the latest cutting-edge technology in audiography and special effects”, isn’t real. No attraction like the one shown in this image was present at the event itself. Clearly, this could be misleading.

Using AI to mislead and deceive

This incident is a good example of using generative AI to potentially mislead others. It is also a remarkably everyday, mundane example, and that is important: when we think of AI deception we tend to think of election interference and high-stakes information operations, but sometimes it can be as ordinary as a Willy Wonka experience in Glasgow trying to sell tickets.

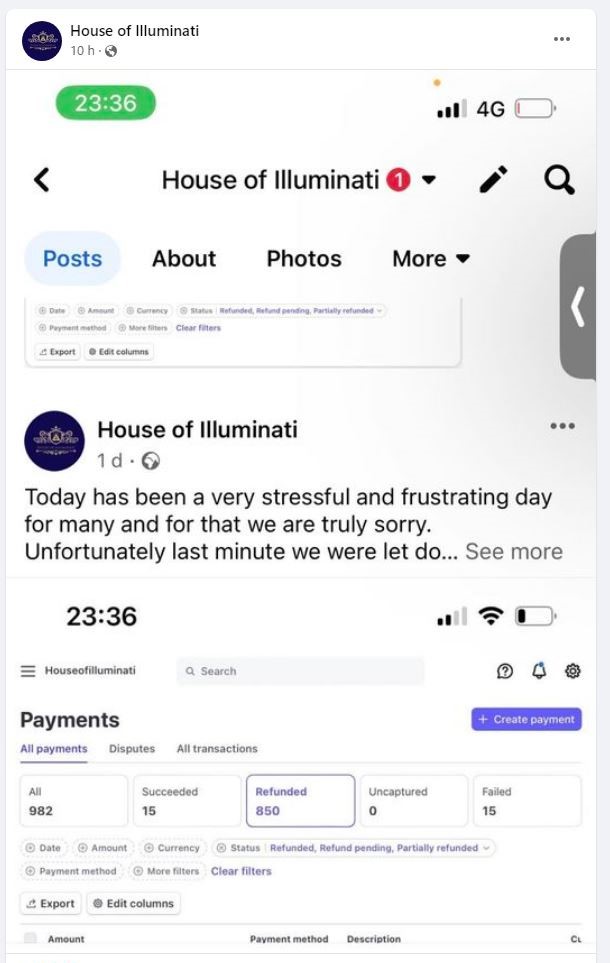

The reality, however, is still serious and this incident has caused real-world financial harm. Tickets cost between £18 and £35 and, according to a screenshot shared by House of Illuminati [below], 982 payments were made for the event. This means almost £20,000 was taken from attendees.
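The ticket figures quoted above can be sanity-checked with a quick calculation. The sketch below is only a rough bound: it assumes each of the 982 payments covered exactly one ticket, which the screenshot does not confirm, and the function name `revenue_bounds` is purely illustrative.

```python
# Figures quoted in the post: ticket price range and number of payments.
PAYMENTS = 982
MIN_PRICE_GBP = 18
MAX_PRICE_GBP = 35


def revenue_bounds(payments, min_price, max_price):
    """Return (lowest, highest) possible takings in GBP.

    Assumption (not confirmed by the source): one ticket per payment.
    """
    return payments * min_price, payments * max_price


low, high = revenue_bounds(PAYMENTS, MIN_PRICE_GBP, MAX_PRICE_GBP)
print(f"Takings between GBP {low:,} and GBP {high:,}")
```

Under that assumption, takings would fall somewhere between £17,676 and £34,370, so a total close to £20,000 would sit near the lower end of the range and suggests most payments were at or near the cheapest price.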

The reality is this will keep happening. In this case, it appears that at best people were misled, and at worst they were actively deceived for financial gain. As it becomes easier, cheaper and quicker to generate these kinds of images, the rate at which these incidents happen will only increase.

What can we do?

Content like this should not be able to spread unimpeded. Without AI image labelling, posts like these will continue to get people to spend money under false pretences, whether it’s fake products or exaggerated events. This leaves consumers at the mercy of companies like House of Illuminati who have clearly acted irresponsibly.

There has been progress on this front very recently. In February, Meta (the owners of Facebook, Instagram, Threads, and WhatsApp) announced that AI-generated images that appear on the first three of those platforms will be labelled.

Nick Clegg, the President of Global Affairs at Meta, wrote that the social platforms will “label images that users post to Facebook, Instagram and Threads when we can detect industry standard indicators that they are AI-generated” (link). This is an important development in addressing the spread of AI images.

It’s important to note here that there is nothing inherently wrong with these images. AI images, in my opinion, provide a net good to society but, as with any emergent technology, they should not be allowed to spread unregulated across social media platforms.

Regulation also has a role to play here. As it stands, while frameworks and suggested codes of conduct exist, the use of AI images by businesses is currently largely unregulated. For example, the Advertising Standards Authority (ASA) Code is media-neutral, meaning it doesn’t distinguish AI-generated images from other types. Further, the ASA’s remit does not cover all social media advertising and promotion, leaving posts like this potentially unregulated.

False advertising is of course nothing new, but the means of producing images have changed, and companies should not be able to use fabricated AI images that bear no resemblance to what they are selling without consequence. We will never be able to stop people using technology to take advantage of others, but a combination of regulation and platform-led change can help reduce the chances of this happening again in the future.

Conclusions

This blog post tracked how a misleading event that led to police involvement was promoted online, the visuals and the materials used, and what we can do to help prevent this happening in the future.

The House of Illuminati Willy’s Chocolate Experience incident offers a good case study of the (mis)use of generative AI images in a UK context. It demonstrates the real-world harms that can be caused by online content and how emergent, developing technologies can wreak havoc when they are not used in a transparent, ethical manner.